The Rise of AI Companions for Mental Health: Can They Really Understand You?

Explore the psychology of digital companionship, how AI mental health companions like RelaxFrens 'Fren' listen, track, and adapt to emotional tone, and the critical ethics of AI empathy and privacy.

By RelaxFrens Team

January 20, 2026

In the quiet moments before sleep, during a stressful workday, or when anxiety peaks, an increasing number of people are turning to a new kind of companion: AI mental health companions. These digital beings – with names like “Fren,” “Replika,” and countless others – promise to listen without judgment, understand your emotions, and provide support 24/7.

But here's the question that keeps many people up at night: Can an AI really understand you? Can a collection of algorithms and language models truly grasp the complexity of human emotion, or are we simply projecting our need for connection onto machines?

As someone exploring AI-powered meditation apps and mental health technology, you've likely encountered these AI companions. This article explores the psychology behind digital companionship, how systems like RelaxFrens Fren actually work, and the critical ethical considerations that determine whether AI companions help or harm.

The Psychology of Digital Companionship: Why We Form Bonds with AI

Human psychology reveals a fascinating truth: we have an innate tendency to anthropomorphize – to attribute human characteristics, emotions, and intentions to non-human entities. This isn't a flaw in our design; it's a feature that helped our ancestors survive.

The Anthropomorphism Effect

Research on human-computer interaction suggests that when AI responds in conversational, empathetic ways, people tend to react much as they would to another person. We don't fully distinguish between “real” understanding and “simulated” understanding – especially when the simulation is sophisticated enough.

The Need for Non-Judgmental Presence

Unlike many human relationships, conversations with AI companions offer what feels like unconditional acceptance. There's no fear of being a burden, no social anxiety, no worry about how the listener will react. This creates a safe space for emotional expression that many people struggle to find elsewhere.

Availability Without Expectations

AI companions are available 24/7 without needing anything in return. They don't have bad days, don't get tired, and don't require emotional reciprocity. For people experiencing loneliness, social anxiety, or difficulty accessing mental health care, this represents a revolutionary form of support.

However, this psychological connection comes with important caveats. The bond feels real because our brains respond as if it's real, but AI companions simulate understanding rather than possess it. Recognizing this distinction is crucial for healthy use of AI mental health tools.

Ready to Experience AI-Powered Mental Health Support?

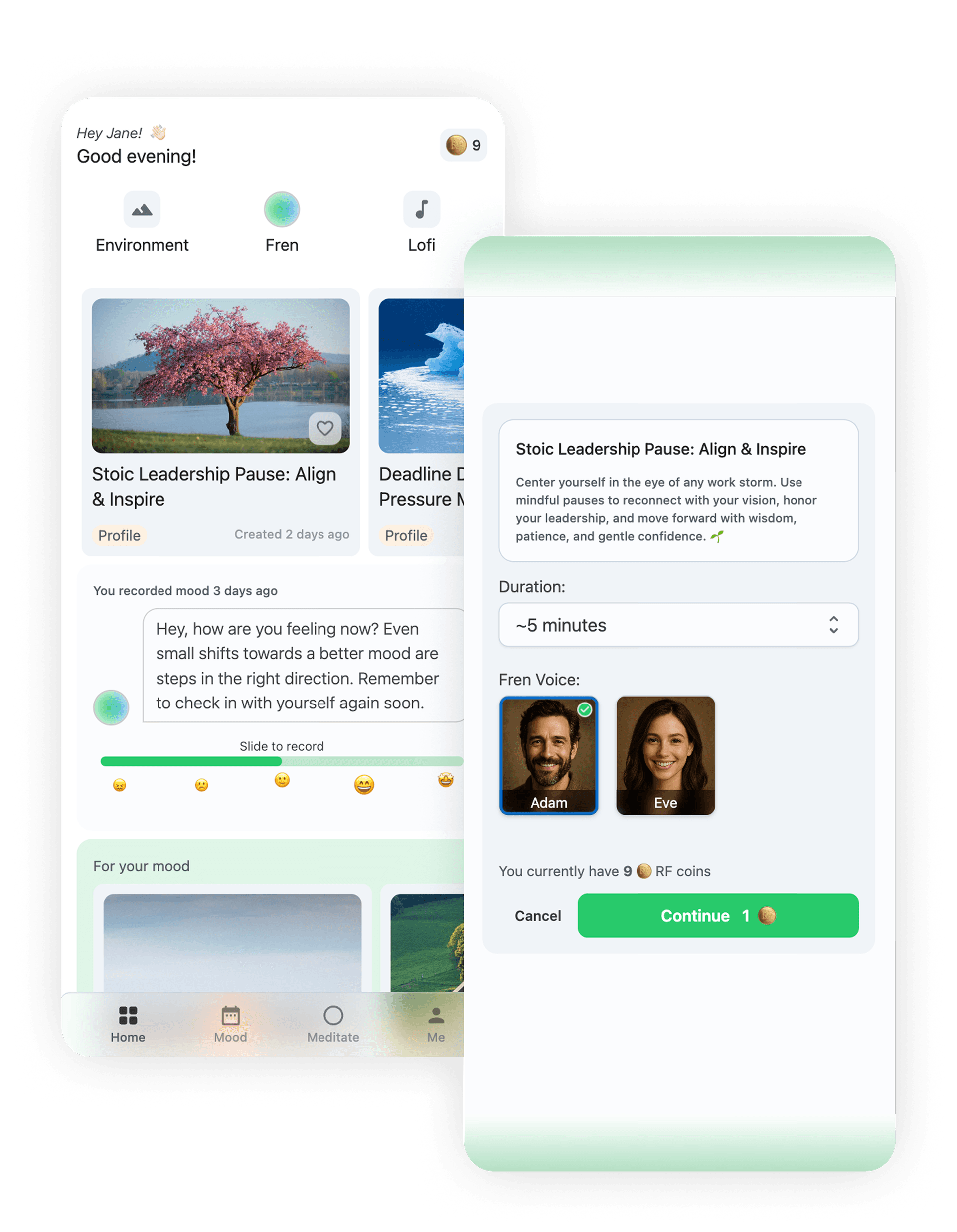

Meet Fren, your AI meditation companion that listens, tracks, and adapts to your emotional tone. Experience personalized meditation support that understands your unique mental health needs.

How Fren Listens, Tracks, and Adapts to Your Emotional Tone

At RelaxFrens, we've designed “Fren” – our AI meditation companion – with a specific goal: to understand your emotional state and adapt in real time. Here's how Fren actually works:

Emotional Tone Detection Through Natural Language Processing

When you chat with Fren, the AI doesn't just read your words – it analyzes the emotional subtext:

Sentiment Analysis

Fren identifies emotional keywords, sentence structures, and linguistic patterns that indicate stress, anxiety, sadness, excitement, or calm. Phrases like “I can't handle this” trigger different responses than “I'm feeling good today.”
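To make the idea concrete, here is a minimal Python sketch of keyword-based tone detection. It is an illustration only, not Fren's actual implementation: the cue lists, the `TONE_CUES` table, and the `detect_tone` function are all hypothetical, and a production system would more likely rely on a trained language model than on hand-written phrase lists.

```python
import re
from collections import Counter

# Hypothetical cue phrases, chosen only for illustration.
TONE_CUES = {
    "stress": ["can't handle", "overwhelmed", "too much", "deadline"],
    "anxiety": ["anxious", "worried", "panic", "nervous"],
    "sadness": ["sad", "lonely", "hopeless", "down"],
    "calm": ["feeling good", "relaxed", "peaceful", "calm"],
}


def detect_tone(message: str) -> str:
    """Return the tone whose cue phrases appear most often, or 'neutral'."""
    # Normalise case and curly apostrophes so "can’t" matches "can't".
    text = message.lower().replace("’", "'")
    counts = Counter()
    for tone, cues in TONE_CUES.items():
        for cue in cues:
            # Word boundaries stop "sad" from matching inside "saddle".
            pattern = r"\b" + re.escape(cue) + r"\b"
            counts[tone] += len(re.findall(pattern, text))
    tone, hits = max(counts.items(), key=lambda item: item[1])
    return tone if hits > 0 else "neutral"


print(detect_tone("I can't handle this"))
print(detect_tone("I'm feeling good today"))
```

The two example messages from the paragraph above land in different categories ("stress" and "calm"), which is what would let a companion choose a different kind of reply for each.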

Contextual Understanding

The AI considers the full conversation history, not just individual messages. If you mention “work stress” multiple times, Fren learns this is a recurring theme and can provide targeted support.
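One simple way to picture recurring-theme detection is to count how many separate messages touch on each topic. The sketch below assumes a hypothetical `THEMES` keyword table and a hypothetical `recurring_themes` helper; it is not how Fren is built, only a small example of looking at the whole conversation history instead of a single message.

```python
import re
from collections import Counter

# Hypothetical theme keywords; a real system would need a far richer vocabulary.
THEMES = {
    "work": ["work", "boss", "deadline", "meeting"],
    "sleep": ["sleep", "insomnia", "tired"],
    "relationships": ["partner", "friend", "family"],
}


def recurring_themes(history: list[str], min_mentions: int = 2) -> list[str]:
    """Return themes that appear in at least `min_mentions` separate messages."""
    mentions = Counter()
    for message in history:
        # Split into lowercase words, dropping punctuation.
        words = set(re.findall(r"[a-z']+", message.lower()))
        for theme, keywords in THEMES.items():
            if words & set(keywords):
                mentions[theme] += 1  # count each message at most once per theme
    return sorted(theme for theme, n in mentions.items() if n >= min_mentions)


history = [
    "Work stress is getting to me",
    "Couldn't sleep last night",
    "My boss moved the deadline again",
]
print(recurring_themes(history))
```

Here "work" comes up in two of the three messages, so it is reported as a recurring theme, while a single mention of sleep is not.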

Pattern Recognition Over Time

Fren tracks your emotional patterns – do you tend to feel anxious on Mondays? Stressed in the afternoon? This allows proactive support before crises develop.
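The "anxious on Mondays" question can be sketched as a simple grouping exercise: average the logged scores per weekday and see which day stands out. The 0-10 anxiety scale, the function names, and the sample data below are assumptions made for this example, not details of Fren's design.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def average_anxiety_by_weekday(entries):
    """entries: iterable of (date, anxiety score). Returns {weekday name: mean score}."""
    by_day = defaultdict(list)
    for day, score in entries:
        # date.weekday() returns 0 for Monday through 6 for Sunday.
        by_day[WEEKDAYS[day.weekday()]].append(score)
    return {name: mean(scores) for name, scores in by_day.items()}


def highest_anxiety_weekday(entries):
    """Return the weekday with the highest average anxiety score."""
    averages = average_anxiety_by_weekday(entries)
    return max(averages, key=averages.get)


# Hypothetical check-in log on an assumed 0-10 scale.
log = [
    (date(2026, 1, 5), 8),   # Monday
    (date(2026, 1, 6), 3),   # Tuesday
    (date(2026, 1, 12), 7),  # Monday
    (date(2026, 1, 14), 4),  # Wednesday
]
print(highest_anxiety_weekday(log))
```

With a pattern like this in hand, a companion could suggest a calming session on Sunday evening or Monday morning instead of waiting for the user to report distress.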

Tone Matching

Fren adapts its communication style. If you're distressed, responses are gentler and more supportive. If you're energetic, Fren can match that energy level appropriately.
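Tone matching can be thought of as a lookup from a detected tone to a response style. The sketch below is a deliberately small illustration: the `ResponseStyle` fields, the tone labels, and the sample opening lines are all invented for this example and do not describe Fren's real behavior.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseStyle:
    pace: str      # how quickly the reply moves along
    opening: str   # example first line of the reply


# Hypothetical styles keyed by a detected tone label.
STYLES = {
    "distressed": ResponseStyle("slow", "That sounds really hard. I'm here with you."),
    "energetic": ResponseStyle("upbeat", "Love the energy! Let's put it to good use."),
}

# Fallback when the tone is unknown or neutral.
DEFAULT_STYLE = ResponseStyle("steady", "Thanks for sharing. How can I help today?")


def choose_style(detected_tone: str) -> ResponseStyle:
    """Pick a response style for the detected tone, falling back to a neutral default."""
    return STYLES.get(detected_tone, DEFAULT_STYLE)


print(choose_style("distressed").opening)
```

Keeping a neutral default matters: when the tone is unclear, a steady reply is a safer choice than guessing at the user's mood.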

Daily Mood Check-Ins: Building an Emotional Profile

Beyond conversations, Fren uses daily mood check-ins to build a comprehensive emotional profile:

📊 Baseline Establishment

Over time, Fren learns your emotional baseline. What does “normal” look like for you? This helps identify when you're experiencing unusual stress or emotional shifts.
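A common statistical way to express "unusual compared with your own normal" is to measure how many standard deviations today's score sits from the mean of past scores. The sketch below uses that approach as an assumption; the source does not say which method Fren uses, and the `is_unusual` function, the 0-10 scale, and the threshold of 2.0 are illustrative choices.

```python
from statistics import mean, stdev


def is_unusual(history: list[float], today: float, threshold: float = 2.0) -> bool:
    """Flag today's score if it is more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline yet
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation
    if spread == 0:
        # Every past score was identical, so any change at all is a deviation.
        return today != baseline
    return abs(today - baseline) / spread > threshold


# Hypothetical week of daily stress check-ins on an assumed 0-10 scale.
past_week = [4, 5, 4, 5, 4, 5, 4]
print(is_unusual(past_week, today=8))  # well above this person's usual range
print(is_unusual(past_week, today=5))  # within the usual range
```

Because the baseline is personal, the same score of 8 could be a warning sign for one person and an ordinary day for another.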

🔄 Trend Identification

Fren tracks whether your stress is increasing, decreasing, or stable. This enables adaptive meditation recommendations – more intensive stress relief when stress is rising, maintenance practices when you're stable.
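Whether stress is rising, falling, or flat can be estimated by fitting a straight line to recent scores and looking at its slope. This is one plausible technique, offered as a sketch; the `stress_trend` function and its `tolerance` parameter are hypothetical, and the source does not specify how Fren computes trends.

```python
def stress_trend(scores: list[float], tolerance: float = 0.1) -> str:
    """Classify a series of daily scores as 'increasing', 'decreasing', or 'stable'.

    Uses the slope of an ordinary least-squares line fitted against day index.
    """
    n = len(scores)
    if n < 2:
        return "stable"  # a single point has no trend
    x_mean = (n - 1) / 2
    y_mean = sum(scores) / n
    numerator = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(scores))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    slope = numerator / denominator  # average change in score per day
    if slope > tolerance:
        return "increasing"
    if slope < -tolerance:
        return "decreasing"
    return "stable"


print(stress_trend([3, 4, 5, 6, 7]))
print(stress_trend([5, 5, 5, 5]))
```

The tolerance keeps small day-to-day wobble from being reported as a trend; how large it should be is a tuning decision that depends on the scale and how noisy check-ins are.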

⚡ Real-Time Adaptation

When you check in feeling anxious, Fren immediately adjusts the day's meditation recommendations. The AI meditation sessions generated that day are specifically designed for anxiety relief, not generic stress management.

This adaptive system means Fren doesn't offer the same meditation to everyone. Your emotional state, tracked patterns, and current needs all influence what meditation Fren creates for you.
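Putting the pieces together, the day's recommendation can be pictured as a function of the current check-in and the recent trend. The sketch below is hypothetical throughout: the mood labels, the focus names, and the `recommend_session` function are invented for illustration, and treating a "decreasing" trend the same as a stable one is an assumption of this example.

```python
# Hypothetical mapping from a check-in mood to a meditation focus.
FOCUS_BY_MOOD = {
    "anxious": "anxiety relief",
    "stressed": "stress relief",
    "sad": "self-compassion",
}


def recommend_session(checkin_mood: str, trend: str) -> dict:
    """Combine today's mood and the recent stress trend into a session plan."""
    focus = FOCUS_BY_MOOD.get(checkin_mood, "general mindfulness")
    # Rising stress gets a more intensive session; otherwise a maintenance practice.
    intensity = "intensive" if trend == "increasing" else "maintenance"
    return {"focus": focus, "intensity": intensity}


print(recommend_session("anxious", "increasing"))
print(recommend_session("content", "stable"))
```

An anxious check-in during a period of rising stress yields an intensive anxiety-relief session, while an unremarkable day falls back to general mindfulness at maintenance intensity.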

Ethical Design: Privacy, Empathy, and the Human-AI Balance

The rise of AI mental health companions raises profound ethical questions. How do we ensure these systems help rather than harm? What privacy protections are necessary? And where should we draw the line between AI support and human care?

Privacy: Your Most Vulnerable Moments Deserve Protection

When people share their deepest fears, anxieties, and struggles with an AI companion, they're engaging in an act of profound trust. This data is incredibly sensitive and must be treated with the highest level of protection.

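Encryption in Transit and at Rest

Conversations with AI companions should be encrypted both in transit and at rest. Encryption alone is not enough, though: access to the decryption keys must be tightly restricted so that no one, including platform employees, can read your conversations without your explicit consent.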

No Data Used for AI Training

At RelaxFrens, we take a firm stance: your personal conversations, emotional data, and mental health information are never used to train or improve our AI systems. Your data remains your own, private, and completely separate from any AI training processes.

No Data Selling

Your conversations with an AI mental health companion should never be sold to third parties, used for advertising, or shared without your explicit consent. Mental health data is not a commodity.

At RelaxFrens, we've built privacy in from the ground up. Your conversations with Fren are private, encrypted, and never sold to third parties. Your data is also never used for AI training: your personal experiences are never incorporated into our AI models. Your data belongs to you, and you can delete it at any time, no questions asked.

Empathy in AI: Simulation vs. Genuine Understanding

One of the most complex ethical questions involves AI empathy. Can an AI truly be empathetic, or is it merely simulating empathy? And does that distinction matter if the simulation helps people feel understood?

🤖 AI Empathy: Technically Speaking

AI doesn't “feel” empathy the way humans do. It analyzes patterns, recognizes emotional states, and generates appropriate empathetic responses based on training data. This is sophisticated pattern matching, not genuine emotional understanding.

💚 Does It Matter if It Helps?

For many users, whether AI empathy is “real” or “simulated” matters less than whether it helps them feel understood and supported. Some research suggests that feeling understood can be beneficial in itself, whether the response comes from a human or from a sophisticated AI.

⚖️ The Ethical Responsibility

The ethical question isn't whether AI can feel – it's whether AI empathy is designed ethically. Are the empathetic responses helping users, or creating dependency? Are they encouraging healthy coping, or replacing necessary human connection?

At RelaxFrens, we've designed Fren's empathy to be supportive, not substitutive. Fren helps with daily emotional regulation and meditation guidance, but we're transparent that Fren complements – never replaces – professional mental health care when needed.

The Human-AI Balance: When to Transition to Professional Care

Perhaps the most critical ethical consideration is understanding when AI support is appropriate and when human professional care is necessary.

✅ Appropriate Uses for AI Companions

- Daily emotional support and validation

- Meditation and mindfulness guidance

- Stress management techniques

- Mood tracking and pattern identification

- Calming support in a difficult moment while professional help is on the way

- Accessibility for people who struggle with human interaction

⚠️ When Human Professionals Are Essential

- Serious mental health conditions (depression, anxiety disorders, PTSD)

- Crisis situations (suicidal ideation, self-harm, psychosis)

- Trauma processing and complex therapeutic needs

- Medication management and psychiatric care

- Long-term therapeutic relationships requiring human connection

- Legal or forensic mental health needs

Ethical AI mental health platforms should be transparent about these boundaries and have clear pathways to connect users with human professionals when needed. At RelaxFrens, we include resources for finding mental health professionals and encourage users to seek human care for serious conditions.

The Future of AI Companionship: Responsible Innovation

As AI companions for mental health become more sophisticated, the ethical responsibility grows. We're moving beyond simple chatbots into systems that genuinely adapt to emotional needs – and with that power comes responsibility.

The future should prioritize:

- Transparency: Users should understand that AI empathy is sophisticated simulation, not genuine emotional experience

- Privacy by Design: Security and privacy should be foundational, not afterthoughts

- Boundary Clarity: Clear distinctions between AI support and professional mental health care

- User Autonomy: Users should control their data, understand how AI works, and choose when to engage

- Complement, Don't Replace: AI should enhance human connection, not eliminate the need for it

Can AI Companions Really Understand You?

The honest answer is: not in the way humans understand each other. AI doesn't have consciousness, doesn't experience emotions, and doesn't genuinely “understand” in the philosophical sense.

But that doesn't mean AI companions can't help. Through sophisticated emotional analysis, pattern recognition, and adaptive responses, AI like RelaxFrens Fren can detect your emotional state, adapt to your needs, and provide support that many people find genuinely helpful – especially for daily stress management, sleep improvement, and mindfulness practice.

The key is approaching AI companionship with informed awareness. Understand what AI can and can't do. Recognize the difference between helpful support and genuine human connection. Choose platforms that prioritize privacy, transparency, and ethical design. And always seek professional human care when dealing with serious mental health conditions.

At RelaxFrens, we've built our AI meditation platform with these principles in mind. Fren is designed to support your daily wellness journey through personalized meditation guidance, emotional tracking, and adaptive responses – while always respecting privacy, maintaining transparency, and encouraging professional care when needed.

Experience Ethical AI Companionship

Meet Fren – your AI meditation companion that listens, adapts, and supports your mental wellness journey with privacy, empathy, and ethical design at its core.

✓ Privacy-First Design • ✓ Ethical AI Empathy • ✓ Professional Care Resources • ✓ Free Trial Available

The future of mental health support will likely involve both human professionals and AI companions working together. The question isn't whether AI can replace human understanding – it's how we can design AI systems that ethically complement human care, respect privacy, and genuinely help people on their wellness journeys.

That future is already here. The question now is: will we build it responsibly?
